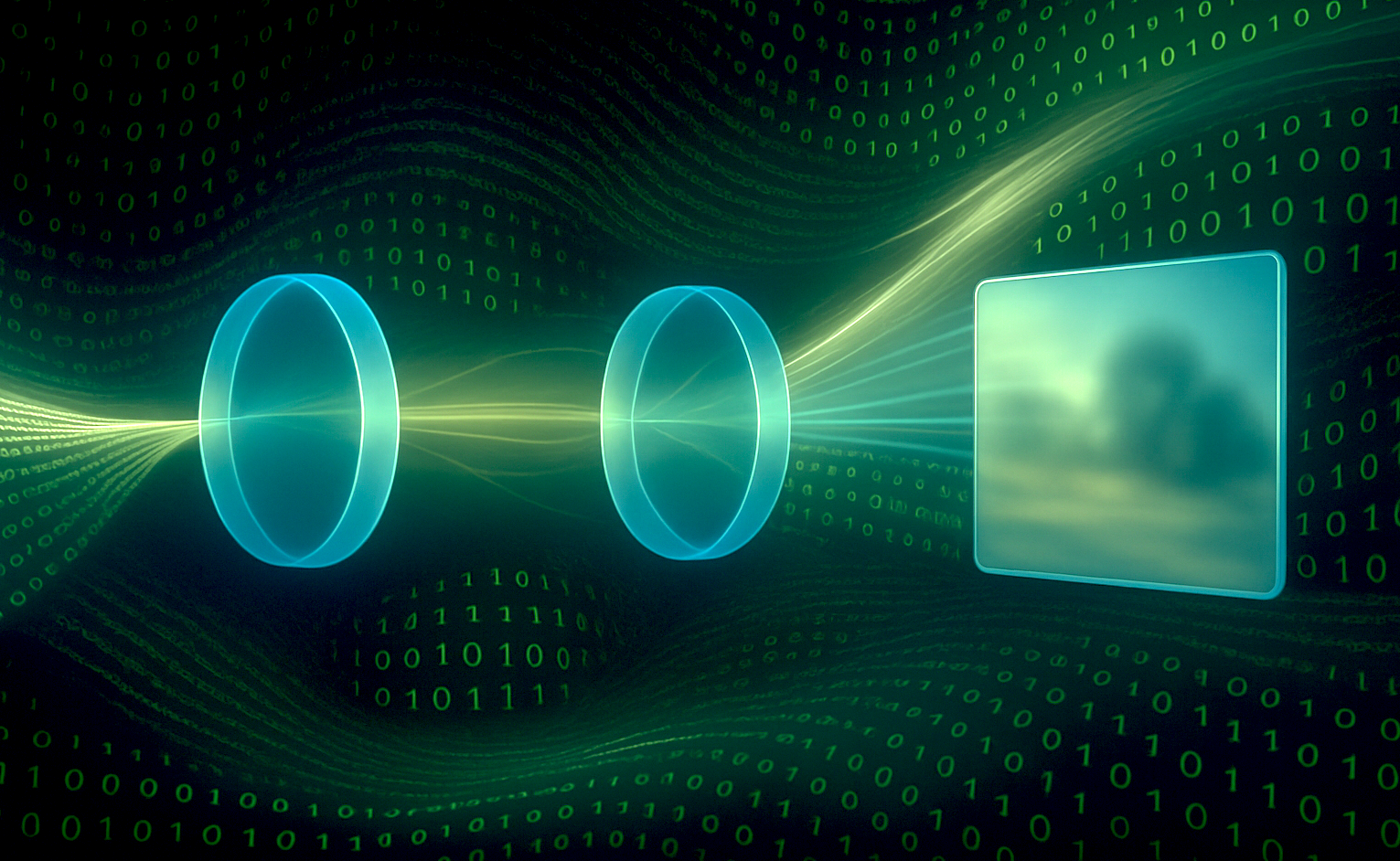

I am a transdisciplinary researcher dedicated to exploring, quantifying, understanding, and manipulating light through a synergistic combination of hardware and software. My research focuses on computational optical imaging and display in 3D (complex wave fields and volumetric scenes) and 4D (space-time).

I pursue this vision by embedding computation directly into acquisition and processing pipelines, through novel hardware designs, advanced algorithms, and their seamless integration. I develop generalizable tools that address fundamental challenges in optical imaging, following a progressive four-stage approach:

- Theory: Rigorous formulations of the underlying physical phenomena

- Numerical modeling: Establishing forward models of physical systems and exploring the linkages among key imaging components

- Inverse computation: Inverting forward models to recover the internal states of objects

- Applications: Facilitating cross-disciplinary implementations while accommodating domain-specific constraints

My overarching objective is to bridge the gaps among core components of computational imaging systems, thereby overcoming the limitations of fragmented co-design. This endeavor encompasses:

- Differentiable Imaging: Developing uncertainty-aware, end-to-end optimized optical imaging systems

- Advancing imaging modalities: Extending holography, light field imaging, coherent diffraction imaging, ptychography, and microscopy for robust 3D and 4D imaging, exploring the physical and computational limits of imaging systems and pushing beyond them

By weaving together theory, modeling, computation, and application, my goal is to unlock novel capabilities in how we capture, process, and visualize light-based information—ultimately enhancing our capacity to perceive and understand the universe around us.

News

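- WeChat Official Accounts Platform (微信公众平台): When imaging systems learn to "learn": turning physical uncertainty into digital intelligence

- 爱科学 (Aikexue): Differentiable imaging unlocks full-pipeline optimization

- 今日科学 (Jinri Kexue): Differentiable imaging unlocks full-pipeline optimization

- ScienceNet (科学网): Differentiable imaging unlocks full-pipeline optimization

- Xiaohongshu (小红书): Another research breakthrough from the HKU Faculty of Engineering!

- Beijing-Hong Kong Academic Exchange Centre (京港學術交流中心): Breakthrough applications of differentiable imaging + Fourier ptychography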